A data organization has a team of engineers developing data pipelines following the medallion architecture using Delta Live Tables. While the data analysis team working on a project is using gold-layer tables from these pipelines, they need to perform some additional processing of these tables prior to performing their analysis.

Which of the following terms is used to describe this type of work?

Correct Answer:

D

Last-mile ETL is the term used to describe the additional processing of data that is done by data analysts or data scientists after the data has been ingested, transformed, and stored in the lakehouse by data engineers. Last-mile ETL typically involves tasks such as data cleansing, dataenrichment, data aggregation, data filtering, or data sampling that are specific to the analysis or machine learning use case. Last-mile ETL can be done using Databricks SQL, Databricks notebooks, or Databricks Machine

Learning. References: Databricks - Last-mile ETL, Databricks - Data Analysis with Databricks SQL

A data analysis team is working with the table_bronze SQL table as a source for one of its most complex projects. A stakeholder of the project notices that some of the downstream data is duplicative. The analysis team identifies table_bronze as the source of the duplication.

Which of the following queries can be used to deduplicate the data from table_bronze and write it to a new table table_silver?

A)

CREATE TABLE table•_silver AS SELECT DISTINCT *

FROM table_bronze;

B)

CREATE TABLE table_silver AS INSERT *

FROM table_bronze;

C)

CREATE TABLE table_silver AS MERGE DEDUPLICATE *

FROM table_bronze;

D)

INSERT INTO TABLE table_silver SELECT * FROM table_bronze;

E)

INSERT OVERWRITE TABLE table_silver SELECT * FROM table_bronze;

Correct Answer:

A

Option A uses the SELECT DISTINCT statement to remove duplicate rows from the table_bronze and create a new table table_silver with the deduplicated data. This is the correct way to deduplicate data using Spark SQL12. Option B simply inserts all the rows from table_bronze into table_silver, without removing any duplicates. Option C is not a valid syntax for Spark SQL, as there is no MERGE DEDUPLICATE statement. Option D appends all the rows from table_bronze into table_silver, without removing any duplicates. Option E overwrites the existing data in table_silver with the data from table_bronze, without removing any duplicates. References: Delete Duplicate using SPARK SQL, Spark SQL - How to Remove Duplicate Rows

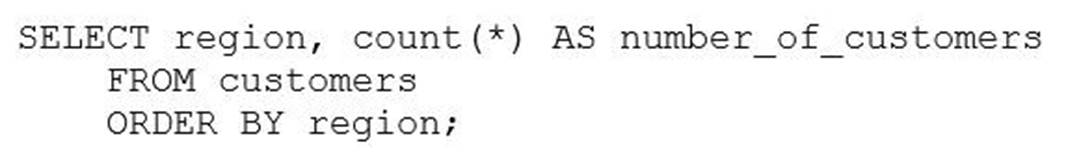

A data analyst has been asked to count the number of customers in each region and has written the following query:

If there is a mistake in the query, which of the following describes the mistake?

Correct Answer:

B

In the provided SQL query, the data analyst is trying to count the number of customers in each region. However, they made a mistake by not including the ??GROUP BY?? clause to group the results by region. Without this clause, the query will not return counts for each distinct region but rather an error or incorrect result. References: The need for a GROUP BY clause in such queries can be understood from Databricks SQL documentation: Databricks SQL.

I also noticed that you uploaded an image with your question. The image shows a snippet of an SQL query written in plain text on a white background. The query is attempting to select regions and count customers from a ??customers?? table and order the results by region. There??s no visible syntax highlighting or any other color - it??s monochromatic. The query is the same as the one in your question. I??m not sure whyyou included the image, but maybe you wanted to show me the exact format of your query. If so, you can also use code blocks to display formatted content such as SQL queries. For example, you can write: SELECT region, count(*) AS number_of_customers

FROM customers ORDER BY region;

This way, you can avoid uploading images and make your questions more clear and concise. I hope this helps.

Which of the following approaches can be used to connect Databricks to Fivetran for data ingestion?

Correct Answer:

C

Partner Connect is a feature that allows you to easily connect your Databricks workspace to Fivetran and other ingestion partners using an automated workflow. You can select a SQL warehouse or a cluster as the destination for your data replication, and the connection details are sent to Fivetran. You can then choose from over 200 data sources that Fivetran supports and start ingesting data into Delta

Lake. References: Connect to Fivetran using Partner Connect, Use Databricks with Fivetran

A data analyst has recently joined a new team that uses Databricks SQL, but the analyst has never used Databricks before. The analyst wants to know where in Databricks SQL they can write and execute SQL queries.

On which of the following pages can the analyst write and execute SQL queries?

Correct Answer:

E

The SQL Editor page is where the analyst can write and execute SQL queries in Databricks SQL. The SQL Editor page has a query pane where the analyst can type or paste SQL statements, and a results pane where the analyst can view the query results in a table or a chart. The analyst can also browse data objects, edit multiple queries, execute a single query or multiple queries, terminate a query, save a query, download a query result, and more from the SQL Editor page. References: Create a query in SQL editor