- (Exam Topic 2)

What should you recommend to prevent users outside the Litware on-premises network from accessing the analytical data store?

Correct Answer:

A

Virtual network rules are a firewall security feature that controls whether the database server for your single databases and elastic pools in Azure SQL Database, or for your databases in SQL Data Warehouse, accepts communications sent from particular subnets in virtual networks.

Server-level, not database-level: Each virtual network rule applies to your whole Azure SQL Database server, not just to one particular database on the server. In other words, a virtual network rule applies at the server level, not at the database level.

References:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-vnet-service-endpoint-rule-overview

- (Exam Topic 3)

You have an Azure Data Factory pipeline named pipeline1 that is invoked by a tumbling window trigger named Trigger1. Trigger1 has a recurrence of 60 minutes.

You need to ensure that pipeline1 will execute only if the previous execution completes successfully. How should you configure the self-dependency for Trigger1?

Correct Answer:

D

Tumbling window self-dependency properties

In scenarios where the trigger shouldn't proceed to the next window until the preceding window is successfully completed, build a self-dependency. A self-dependency trigger that's dependent on the success of earlier runs of itself within the preceding hour will have the properties indicated in the following code.

Example code:

    {
        "name": "DemoSelfDependency",
        "properties": {
            "runtimeState": "Started",
            "pipeline": {
                "pipelineReference": {
                    "referenceName": "Demo",
                    "type": "PipelineReference"
                }
            },
            "type": "TumblingWindowTrigger",
            "typeProperties": {
                "frequency": "Hour",
                "interval": 1,
                "startTime": "2018-10-04T00:00:00Z",
                "delay": "00:01:00",
                "maxConcurrency": 50,
                "retryPolicy": {
                    "intervalInSeconds": 30
                },
                "dependsOn": [
                    {
                        "type": "SelfDependencyTumblingWindowTriggerReference",
                        "size": "01:00:00",
                        "offset": "-01:00:00"
                    }
                ]
            }
        }
    }
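To make the effect of `offset` and `size` concrete, here is a minimal Python sketch that computes which earlier window a given window depends on. It is only an illustration of the arithmetic, not Data Factory code: the helper names `parse_timespan` and `dependency_window` are hypothetical, and the sketch assumes the dependency window starts at the current window start plus `offset` and spans `size`.

```python
from datetime import datetime, timedelta


def parse_timespan(value: str) -> timedelta:
    """Parse a '[-]hh:mm:ss' timespan string into a timedelta (hypothetical helper)."""
    sign = -1 if value.startswith("-") else 1
    hours, minutes, seconds = (int(part) for part in value.lstrip("+-").split(":"))
    return sign * timedelta(hours=hours, minutes=minutes, seconds=seconds)


def dependency_window(window_start: datetime, offset: str, size: str):
    """Return (start, end) of the earlier window that must succeed first.

    Assumption: the dependency window begins at window_start + offset
    and covers a span of `size`.
    """
    dep_start = window_start + parse_timespan(offset)
    return dep_start, dep_start + parse_timespan(size)


# The 01:00-02:00 window of an hourly trigger, using the values from the example.
current = datetime(2018, 10, 4, 1, 0, 0)
start, end = dependency_window(current, "-01:00:00", "01:00:00")
print(start.isoformat(), "->", end.isoformat())
```

With an offset of `-01:00:00` and a size of `01:00:00`, the 01:00 window depends on the 00:00 to 01:00 window, which is the immediately preceding run of an hourly trigger.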

Reference: https://docs.microsoft.com/en-us/azure/data-factory/tumbling-window-trigger-dependency

- (Exam Topic 3)

You have an Azure subscription.

You need to deploy an Azure Data Lake Storage Gen2 Premium account. The solution must meet the following requirements:

• Blobs that are older than 365 days must be deleted.

• Administrator efforts must be minimized.

• Costs must be minimized.

What should you use? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Solution:

https://learn.microsoft.com/en-us/azure/storage/blobs/premium-tier-for-data-lake-storage

Does this meet the goal?

Correct Answer:

A

- (Exam Topic 3)

You plan to monitor an Azure data factory by using the Monitor & Manage app.

You need to identify the status and duration of activities that reference a table in a source database.

Which three actions should you perform in sequence? To answer, move the actions from the list of actions to the answer area and arrange them in the correct order.

Solution:

Step 1: From the Data Factory authoring UI, generate a user property for Source on all activities.

Step 2: From the Data Factory monitoring app, add the Source user property to the Activity Runs table.

You can promote any pipeline activity property as a user property so that it becomes an entity that you can monitor. For example, you can promote the Source and Destination properties of the copy activity in your pipeline as user properties. You can also select Auto Generate to generate the Source and Destination user properties for a copy activity.

Step 3: From the Data Factory authoring UI, publish the pipelines.

Publish output data to data stores such as Azure SQL Data Warehouse for business intelligence (BI) applications to consume.

References:

https://docs.microsoft.com/en-us/azure/data-factory/monitor-visually

Does this meet the goal?

Correct Answer:

A

- (Exam Topic 3)

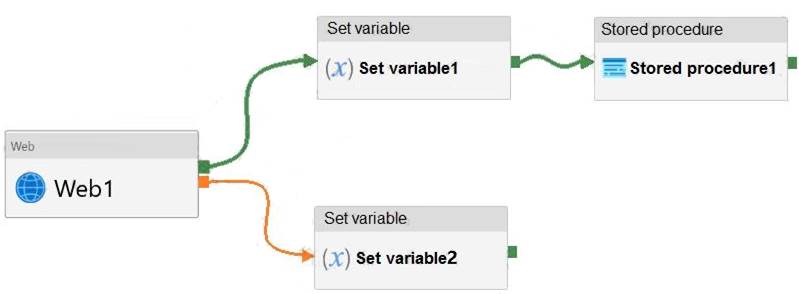

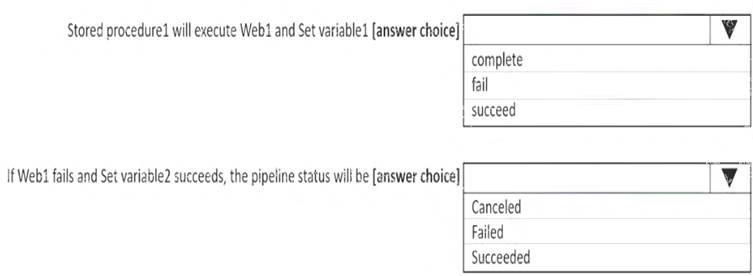

You have an Azure Data Factory pipeline that has the activities shown in the following exhibit.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.

NOTE: Each correct selection is worth one point.

Solution:

Box 1: succeed

Box 2: failed

Example:

Now let’s say we have a pipeline with 3 activities, where Activity1 has a success path to Activity2 and a failure path to Activity3. If Activity1 fails and Activity3 succeeds, the pipeline will fail. The presence of the success path alongside the failure path changes the outcome reported by the pipeline, even though the activity executions from the pipeline are the same as the previous scenario.

Activity1 fails, Activity2 is skipped, and Activity3 succeeds. The pipeline reports failure.

Reference:

https://datasavvy.me/2021/02/18/azure-data-factory-activity-failures-and-pipeline-outcomes/
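The outcome described in the example can be reproduced with a small Python sketch. It models one reading of the rule: the pipeline result is decided by its leaf activities, and a skipped leaf is judged by its upstream activity instead. The function `pipeline_outcome` and its input shapes are hypothetical, chosen for illustration only, and the rule itself is an assumption based on the scenario above rather than a statement of how the service is implemented.

```python
def pipeline_outcome(status, parents):
    """Return 'Succeeded' or 'Failed' for a pipeline run (illustrative model).

    status:  activity name -> 'Succeeded' | 'Failed' | 'Skipped'
    parents: activity name -> list of upstream activity names
    """
    # An activity is a leaf if no other activity depends on it.
    has_child = {p for ups in parents.values() for p in ups}
    leaves = [a for a in status if a not in has_child]

    def evaluate(activity):
        # Assumption: a skipped activity is judged by its upstream activities.
        if status[activity] == "Skipped":
            return all(evaluate(p) for p in parents.get(activity, []))
        return status[activity] == "Succeeded"

    return "Succeeded" if all(evaluate(leaf) for leaf in leaves) else "Failed"


# Activity1 has a success path to Activity2 and a failure path to Activity3.
status = {"Activity1": "Failed", "Activity2": "Skipped", "Activity3": "Succeeded"}
parents = {"Activity2": ["Activity1"], "Activity3": ["Activity1"]}
print(pipeline_outcome(status, parents))
```

Under this model the skipped Activity2 is a leaf, so the failure of Activity1 is counted and the pipeline reports failure. If Activity2 is removed, Activity3 becomes the only leaf and the same activity results produce a successful pipeline.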

Does this meet the goal?

Correct Answer:

A