- (Exam Topic 3)

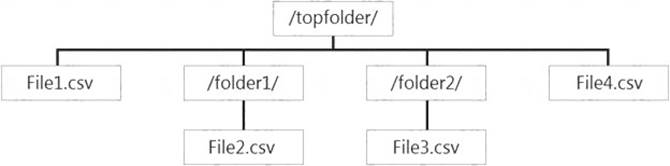

You have files and folders in Azure Data Lake Storage Gen2 for an Azure Synapse workspace as shown in the following exhibit.

You create an external table named ExtTable that has LOCATION='/topfolder/'.

When you query ExtTable by using an Azure Synapse Analytics serverless SQL pool, which files are returned?

Correct Answer:

B

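To run a T-SQL query over a set of files within a folder or set of folders while treating them as a single entity or rowset, provide a path to a folder or a pattern (using wildcards) over a set of files or folders. In a serverless SQL pool, an external table whose LOCATION is a folder returns only the files directly in that folder; files in subfolders are not returned unless the path ends with /**.

Reference: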

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/query-data-storage#query-multiple-files-or-folders
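The difference between a plain folder path and a recursive path can be sketched in plain Python. This is only an illustration of the selection rule, not how the serverless SQL pool is implemented. The file names and the helpers `files_in_folder` and `files_recursive` are hypothetical, and the layout is not the one from the exhibit.

```python
from pathlib import PurePosixPath

# Hypothetical layout (NOT the exhibit): two files at the top level,
# two more in subfolders.
FILES = [
    "/topfolder/a.csv",
    "/topfolder/sub1/b.csv",
    "/topfolder/sub2/c.csv",
    "/topfolder/d.csv",
]


def files_in_folder(files, folder):
    """Files directly inside `folder`; models LOCATION='/topfolder/'."""
    folder_path = PurePosixPath(folder)
    return [f for f in files if PurePosixPath(f).parent == folder_path]


def files_recursive(files, folder):
    """Files anywhere under `folder`; models a path ending in /**."""
    folder_path = PurePosixPath(folder)
    return [f for f in files if folder_path in PurePosixPath(f).parents]


if __name__ == "__main__":
    print(files_in_folder(FILES, "/topfolder/"))
    print(files_recursive(FILES, "/topfolder/"))
```

Under these assumptions, the folder-only selection skips everything in `sub1` and `sub2`, while the recursive selection returns all four files.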

- (Exam Topic 3)

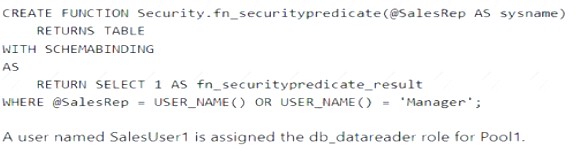

You have an Azure Synapse Analytics dedicated SQL pool named Pool1 that contains a table named Sales. Sales has row-level security (RLS) applied. RLS uses the following predicate filter.

A user named SalesUser1 is assigned the db_datareader role for Pool1. Which rows in the Sales table are returned when SalesUser1 queries the table?

Correct Answer:

A

- (Exam Topic 3)

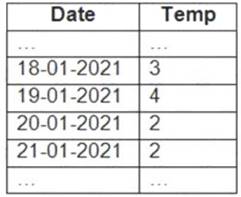

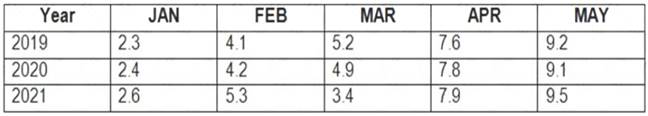

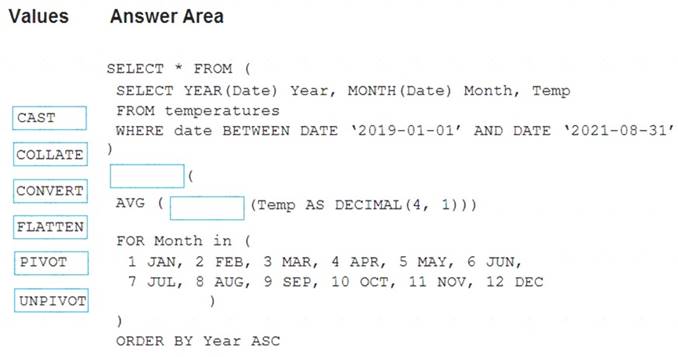

You have an Apache Spark DataFrame named temperatures. A sample of the data is shown in the following table.

You need to produce the following table by using a Spark SQL query.

How should you complete the query? To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Solution:

Box 1: PIVOT

PIVOT rotates a table-valued expression by turning the unique values from one column in the expression into multiple columns in the output. PIVOT also runs aggregations where they're required on any remaining column values that are wanted in the final output.

Reference:

https://learnsql.com/cookbook/how-to-convert-an-integer-to-a-decimal-in-sql-server/

https://docs.microsoft.com/en-us/sql/t-sql/queries/from-using-pivot-and-unpivot
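To make the rotate-and-aggregate behaviour of PIVOT concrete, here is a minimal sketch in plain Python rather than Spark SQL, so that it runs without a Spark session. The sample rows, the column names `year`, `month`, and `temp`, and the helper `pivot_avg` are all hypothetical; they are not taken from the exhibit.

```python
from collections import defaultdict

# Hypothetical sample data (not the table from the question).
rows = [
    {"year": 2019, "month": "JAN", "temp": 2.0},
    {"year": 2019, "month": "JAN", "temp": 4.0},
    {"year": 2019, "month": "FEB", "temp": 5.0},
    {"year": 2020, "month": "JAN", "temp": 1.0},
]


def pivot_avg(rows, group_key, pivot_key, value_key, pivot_values):
    """Turn each value of `pivot_key` into a column holding the average
    of `value_key`, with one output row per distinct `group_key`."""
    sums = defaultdict(lambda: [0.0, 0])  # (group, pivot value) -> [total, count]
    for row in rows:
        cell = sums[(row[group_key], row[pivot_key])]
        cell[0] += row[value_key]
        cell[1] += 1

    result = []
    for group in sorted({row[group_key] for row in rows}):
        out = {group_key: group}
        for value in pivot_values:
            total, count = sums.get((group, value), (0.0, 0))
            # No matching input rows means an empty (None) cell.
            out[value] = total / count if count else None
        result.append(out)
    return result


if __name__ == "__main__":
    for line in pivot_avg(rows, "year", "month", "temp", ["JAN", "FEB"]):
        print(line)
```

Each distinct `month` value becomes its own column, and the two January 2019 readings collapse into a single averaged cell, which is the aggregation step described above.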

Correct Answer:

A

- (Exam Topic 3)

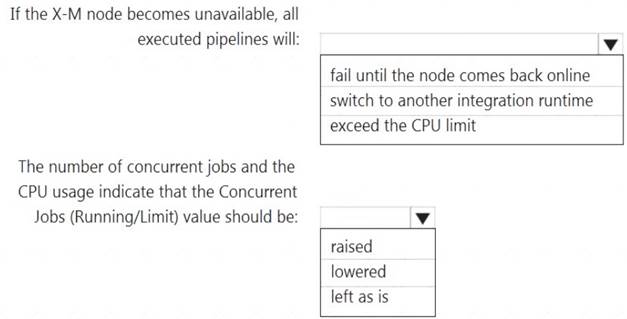

You have a self-hosted integration runtime in Azure Data Factory.

The current status of the integration runtime has the following configurations:

- Status: Running
- Type: Self-Hosted
- Version: 4.4.7292.1
- Running / Registered Node(s): 1/1
- High Availability Enabled: False
- Linked Count: 0
- Queue Length: 0
- Average Queue Duration: 0.00s

The integration runtime has the following node details:

- Name: X-M
- Status: Running
- Version: 4.4.7292.1
- Available Memory: 7697MB
- CPU Utilization: 6%
- Network (In/Out): 1.21KBps/0.83KBps
- Concurrent Jobs (Running/Limit): 2/14
- Role: Dispatcher/Worker
- Credential Status: In Sync

Use the drop-down menus to select the answer choice that completes each statement based on the information presented.

NOTE: Each correct selection is worth one point.

Solution:

Box 1: fail until the node comes back online

We see: High Availability Enabled: False

Note: Associating multiple nodes provides higher availability of the self-hosted integration runtime so that it's no longer the single point of failure in your big data solution or cloud data integration with Data Factory. Here only one node is registered (1/1), so there is no other node to take over if it goes offline.

Box 2: lowered

We see:

- Concurrent Jobs (Running/Limit): 2/14
- CPU Utilization: 6%

Note: When the processor and available RAM aren't well utilized, but the execution of concurrent jobs reaches a node's limits, scale up by increasing the number of concurrent jobs that a node can run.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/create-self-hosted-integration-runtime

Correct Answer:

A

- (Exam Topic 3)

You need to trigger an Azure Data Factory pipeline when a file arrives in an Azure Data Lake Storage Gen2 container.

Which resource provider should you enable?

Correct Answer:

C

Event-driven architecture (EDA) is a common data integration pattern that involves production, detection, consumption, and reaction to events. Data integration scenarios often require Data Factory customers to trigger pipelines based on events happening in a storage account, such as the arrival or deletion of a file in an Azure Blob Storage account. Data Factory natively integrates with Azure Event Grid, which lets you trigger pipelines on such events.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/how-to-create-event-trigger

https://docs.microsoft.com/en-us/azure/data-factory/concepts-pipeline-execution-triggers
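As a rough illustration of how a storage event can be matched against a trigger's path filters, the sketch below checks a simplified Event Grid `Microsoft.Storage.BlobCreated` event against "begins with" and "ends with" filters. It is a simplification for study purposes, not Data Factory's actual matching logic. The function `matches_storage_trigger`, the container name `raw`, and the paths are hypothetical.

```python
def matches_storage_trigger(event, begins_with="", ends_with=""):
    """Return True if a blob-created event passes both path filters.

    Assumes the event subject has the form
    /blobServices/default/containers/<container>/blobs/<path>
    and the filters use the form /<container>/blobs/<path prefix>.
    """
    if event.get("eventType") != "Microsoft.Storage.BlobCreated":
        return False

    subject = event.get("subject", "")
    marker = "/containers/"
    idx = subject.find(marker)
    if idx == -1:
        return False

    # ".../containers/raw/blobs/landing/x.csv" -> "/raw/blobs/landing/x.csv"
    path = "/" + subject[idx + len(marker):]
    return path.startswith(begins_with) and path.endswith(ends_with)


if __name__ == "__main__":
    # Hypothetical event; only the fields used by the sketch are included.
    event = {
        "eventType": "Microsoft.Storage.BlobCreated",
        "subject": "/blobServices/default/containers/raw/blobs/landing/sales.csv",
    }
    print(matches_storage_trigger(event, "/raw/blobs/landing/", ".csv"))
```

In this sketch, a deletion event or a file outside the `landing` folder would not pass the filters, so it would not start the pipeline.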